AI Coding Assistants Don’t Understand Your Code: LSP, SCIP, and Real Code Intelligence


Why AI Coding Assistants Don't Actually Understand Your Code

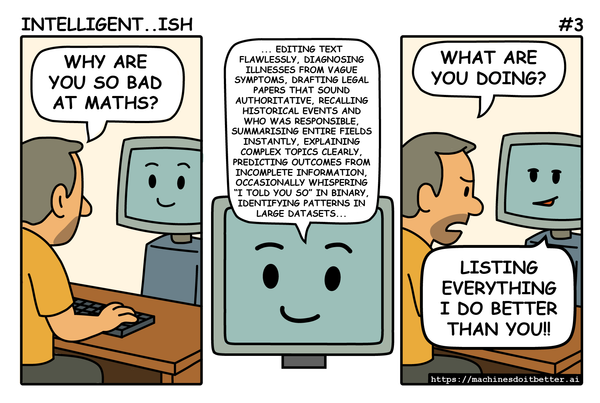

If you've spent any time with AI coding assistants, you've probably experienced the moment where everything falls apart. The model confidently suggests a function that doesn't exist. It imports a module you deleted three months ago. It refactors code in a way that completely ignores how your architecture actually works.

The dirty secret of AI-assisted development is that these tools don't understand your codebase. They see the file you have open, and maybe a few related files if you're lucky. But the mental model you carry around (how components connect, why that weird naming convention exists, what broke last time someone touched the authentication layer) does not transfer.

The Context Window Illusion

When you paste code into an LLM, it processes tokens. It's very good at pattern matching within those tokens. But a typical codebase has thousands of files, complex dependency graphs, and implicit relationships that only become clear when you trace execution paths.

Current AI assistants handle this through something akin to keyhole surgery – they peer through a narrow opening and make educated guesses about what surrounds it. Sometimes those guesses are remarkably good. Often, they're not.

The problem gets worse as projects grow. A 50-file project might fit mostly within context limits. A production codebase with hundreds of thousands of lines? The AI is essentially working blind, inferring structure from whatever fragments you feed it.

What Would Real Code Intelligence Look Like?

Compilers and IDEs solved this problem decades ago. When you hit "go to definition" in your editor, it doesn't guess; it knows. It has parsed the entire codebase, built symbol tables, resolved types, and constructed a complete map of what exists and how it connects.
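To make "built symbol tables" concrete, here is a minimal sketch using Python's standard `ast` module. The file names and contents in `SOURCES` are hypothetical, and the table only records top-level and nested function and class definitions by bare name. A real language server also resolves scopes, imports, and types, none of which this toy version attempts.

```python
import ast

def build_symbol_table(sources: dict[str, str]) -> dict[str, tuple[str, int]]:
    """Map each function or class name to the (file, line) where it is defined.

    Assumption: names are unique across files; a later definition with the
    same name simply overwrites an earlier one.
    """
    table: dict[str, tuple[str, int]] = {}
    for filename, text in sources.items():
        tree = ast.parse(text, filename=filename)
        for node in ast.walk(tree):
            if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef)):
                table[node.name] = (filename, node.lineno)
    return table

# Hypothetical two-file project, held in memory so the sketch is self-contained.
SOURCES = {
    "auth.py": "def login(user):\n    return check(user)\n",
    "checks.py": "class Policy:\n    pass\n\ndef check(user):\n    return True\n",
}

TABLE = build_symbol_table(SOURCES)

def go_to_definition(name: str):
    """Look the name up instead of guessing; returns None if it is unknown."""
    return TABLE.get(name)

print(go_to_definition("check"))  # ('checks.py', 4)
```

The point of the sketch is the lookup at the end: once the index exists, answering "where is `check` defined?" is a dictionary access, not an inference from surrounding text.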

The technologies that power this are well established. The Language Server Protocol (LSP) provides real-time code intelligence, including completions, diagnostics, and symbol resolution. SCIP (a recursive acronym for SCIP Code Intelligence Protocol) offers a standardised, language-agnostic index format for code graphs that can be queried across entire repositories.

The question is: why aren't AI assistants using these?

Actually, some now are. In December 2025, Anthropic shipped native LSP support in Claude Code (version 2.0.74). It's a significant move – the tool now has access to go-to-definition, find-references, and hover documentation for 11 languages, including Python, TypeScript, Rust, and Go.

The implications are substantial. As one developer put it, Claude Code now "sees like a software architect" rather than doing sophisticated grep. When the agent needs to understand where a function is defined or how a type flows through the system, it can query the same language servers your IDE uses instead of inferring from text patterns.

But the integration is still early. LSP navigation requests are position-based: a call such as textDocument/definition takes a file URI plus a line and character offset. That is awkward for agentic usage – you can't simply ask "Where is Foo.bar defined?" without first locating a reference to it. José Valim, creator of Elixir, noted this friction shortly after the release. The gap between "LSP exists" and "LSP works seamlessly for AI agents" turns out to be non-trivial.
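For a sense of what position-based means in practice, the sketch below builds a single `textDocument/definition` request the way LSP frames it: a JSON-RPC 2.0 body preceded by a `Content-Length` header. The file URI and the position are made up for illustration, and the helper names are not part of any library. Note that LSP positions are zero-based, so line 41 here is what an editor would display as line 42.

```python
import json

def frame_lsp_request(request_id: int, method: str, params: dict) -> bytes:
    """Encode a JSON-RPC 2.0 request with the Content-Length header LSP expects."""
    body = json.dumps(
        {"jsonrpc": "2.0", "id": request_id, "method": method, "params": params}
    ).encode("utf-8")
    header = f"Content-Length: {len(body)}\r\n\r\n".encode("ascii")
    return header + body

def definition_request(request_id: int, uri: str, line: int, character: int) -> bytes:
    """Build a textDocument/definition request for a zero-based position."""
    params = {
        "textDocument": {"uri": uri},
        "position": {"line": line, "character": character},
    }
    return frame_lsp_request(request_id, "textDocument/definition", params)

# Hypothetical location: the caller must already know where a reference sits.
MESSAGE = definition_request(1, "file:///project/src/app.py", 41, 8)
print(MESSAGE.decode("utf-8"))
```

Nothing in the request names the symbol. The agent has to find an occurrence of `Foo.bar` first, then ask about that exact position, which is the friction described above.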

And LSP itself has limits. It's designed for real-time editor integration, not deep codebase analysis. SCIP goes further – an indexer can process an entire repository ahead of time, and the resulting index can answer questions about symbol relationships across the full dependency graph. So far, Claude Code hasn't shipped SCIP support, leaving room for tools that provide richer semantic understanding.

The Memory Problem

There's a second dimension that pure code analysis doesn't solve: persistence.

Human developers accumulate project knowledge over time. You remember that the caching layer is fragile. You know that the CEO's pet feature can't be touched. You've internalised which patterns the team prefers and which experiments failed.

AI assistants start fresh every session. Each conversation is year zero. You can paste context and write elaborate system prompts, but you're essentially re-teaching the same lessons repeatedly.

This suggests that real code intelligence for AI needs two things working together: semantic understanding of the codebase as it exists now, and persistent memory of project knowledge that accumulates over time.
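The persistence half can be sketched in a few lines. The example below is a deliberately naive, hypothetical `ProjectMemory` class that stores notes in a JSON file so that a second "session" can read what the first one wrote. The class name, file name, and topic scheme are all assumptions made for illustration; a real memory backend would need search, expiry, and conflict handling.

```python
import json
import tempfile
from pathlib import Path

class ProjectMemory:
    """Hypothetical note store that survives across sessions via a JSON file."""

    def __init__(self, path: Path) -> None:
        self.path = Path(path)
        # Load earlier notes if a previous session left any behind.
        self.notes = json.loads(self.path.read_text()) if self.path.exists() else []

    def remember(self, topic: str, note: str) -> None:
        """Append a note and write the whole list back to disk immediately."""
        self.notes.append({"topic": topic, "note": note})
        self.path.write_text(json.dumps(self.notes, indent=2))

    def recall(self, topic: str) -> list[str]:
        """Return every note recorded under the given topic."""
        return [entry["note"] for entry in self.notes if entry["topic"] == topic]

# A temporary directory stands in for the project's own storage location.
MEMORY_FILE = Path(tempfile.mkdtemp()) / "project_memory.json"

session_one = ProjectMemory(MEMORY_FILE)
session_one.remember("caching", "The caching layer is fragile.")

# A fresh object simulates a new conversation starting from the saved file.
session_two = ProjectMemory(MEMORY_FILE)
RECALLED = session_two.recall("caching")
print(RECALLED)  # ['The caching layer is fragile.']
```

The second session never saw the original conversation, yet it starts with the lesson already in hand, which is the property the paragraph above is asking for.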

The Emerging Tooling Layer

A quiet infrastructure race is happening beneath the surface of AI coding tools. Various projects are building what you might call "code intelligence middleware" – systems that sit between the raw codebase and the AI, providing structured access to symbols, relationships, and project history.

Some wrap LSP servers in MCP tools. Others build SCIP indexes for deeper cross-repository analysis. A few are experimenting with memory backends that persist context across sessions.

The approaches vary, but they share a thesis: the AI itself doesn't need to understand code structure. It needs tools that understand code structure and can answer questions on demand.
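A rough sketch of that division of labour: the model emits a structured request, and a small dispatcher routes it to code that actually parses the source. The tool names, the JSON call shape, and the sample `SOURCE` are all hypothetical, and this is not the MCP wire format, only the general pattern of answering questions on demand.

```python
import ast
import json

# Hypothetical source under analysis, kept inline so the sketch runs as-is.
SOURCE = "def check(user):\n    return True\n\ndef login(user):\n    return check(user)\n"

def find_definition(name: str):
    """Return the line where a function with this name is defined, if any."""
    for node in ast.walk(ast.parse(SOURCE)):
        if isinstance(node, ast.FunctionDef) and node.name == name:
            return {"name": name, "line": node.lineno}
    return None

def find_references(name: str):
    """Return the lines where the bare name is used (definitions excluded)."""
    lines = sorted(
        node.lineno
        for node in ast.walk(ast.parse(SOURCE))
        if isinstance(node, ast.Name) and node.id == name
    )
    return {"name": name, "lines": lines}

# Registry of tools the assistant is allowed to call.
TOOLS = {"find_definition": find_definition, "find_references": find_references}

def handle_tool_call(raw_call: str) -> str:
    """Dispatch a JSON tool call of the assumed form {"tool": ..., "arguments": {...}}."""
    call = json.loads(raw_call)
    tool = TOOLS.get(call["tool"])
    if tool is None:
        return json.dumps({"error": f"unknown tool: {call['tool']}"})
    return json.dumps({"result": tool(**call["arguments"])})

print(handle_tool_call('{"tool": "find_references", "arguments": {"name": "check"}}'))
```

The model never needs to hold the parse tree in its context. It only needs to know which question to ask and how to read the structured answer.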

With Anthropic now shipping native LSP, part of this middleware layer is getting absorbed into the base tooling. The interesting question is what remains differentiated: deeper SCIP-based analysis, persistent project memory, and cross-repository knowledge graphs. The "base model eats the business model" dynamic is real, but there's still meaningful territory beyond what ships out of the box.

Does It Actually Help?

The honest answer: it depends.

For navigational tasks – finding definitions, tracing references, and understanding type hierarchies – tool-augmented AI performs dramatically better than pure LLM inference. It's the difference between guessing and knowing.

For higher-level tasks – architecture decisions, refactoring strategies, and debugging complex issues – the picture is murkier. Semantic code data provides better grounding, but the AI's reasoning still matters. Better inputs help, but they don't fix fundamental limitations in how models understand software systems.

The most interesting gains seem to come from persistence. When an AI assistant can recall that you tried a particular approach last week and it failed, or that a specific module has ownership constraints, it stops making the same suggestions repeatedly. The conversation builds on itself rather than starting over.

What's Missing

Current approaches still have significant gaps.

Runtime behaviour remains mostly invisible. Static analysis tells you what code says, not what it does. Performance characteristics, failure modes, and actual usage patterns – these require instrumentation that few AI tools incorporate.

Cross-repository knowledge is limited. Most tools focus on single codebases. Real development often involves multiple repos, internal packages, and external dependencies that interact in complex ways. SCIP was designed for this, but adoption in AI tooling remains sparse.

And there's a cold start problem. Building semantic indexes takes time. For large codebases, the initial analysis can take minutes or hours, and most developers will give up before waiting that long.

Where This Goes

Claude Code shipping native LSP feels like a threshold moment. The capability that was previously bolted on through third-party tools is now built in. The moat that various startups were digging just got significantly shallower.

My guess is that deeper code intelligence becomes table stakes within a year. The question shifts to what's built on top: better memory systems, richer analysis through SCIP, cross-repo understanding, and runtime integration.

The more interesting question is whether this shifts how we think about AI in development. Today, we treat coding assistants as smart autocomplete – they suggest, we review. With real codebase understanding, they could become something closer to junior developers who actually know the project.

That's a meaningful difference. It changes what tasks you can delegate, how you structure prompts, and ultimately how AI fits into development workflows.

Whether the current generation of tools can get there, or whether it requires fundamental advances in how models reason about code, remains an open question. The infrastructure is being built. What runs on top of it is still being figured out.

One project taking this further is CKB (codeknowledge.dev), an open-source toolkit that provides 80+ MCP tools specifically designed for AI code intelligence. Built by developers who ran into these limitations while working on their own projects, CKB combines both LSP and SCIP to give AI assistants semantic understanding that goes beyond what's currently shipping in mainstream tools. The differentiator is the combination: real-time LSP queries for navigation, SCIP indexing for deep cross-file analysis, and persistent memory backends that let project knowledge accumulate across sessions. It's the kind of infrastructure layer that sits between "Claude Code has LSP now" and "the AI actually understands my codebase" – addressing exactly the gaps that native LSP support doesn't yet cover.